You might remember AgNES, the cute little robot head with one eye that would turn to follow bright colours, modelled as a sort of younger (or older?) sibling to Portal’s GLaDOS. Well, AgNES is evolving and growing – this time, AgNES even has a brain. Not a particularly smart brain, but it’s a start.

There are two broad lines of thought informing the design of AgNES. One is the concept of future archaeology, or how past and present technologies will appear to researchers hundreds or thousands of years from now – how protocols, interfaces, design conventions, and so on, will be accessible to people who will want to study our present society. In many cases, these systems will quite likely have to be reverse engineered to even make them functional, and then the work of trying to understand the social and cultural role they played may be pretty close to a guessing game. The other big line of thought is human deep-space exploration, or how we will adapt to sending people on really long deep-space voyages, sometimes on their own and sometimes with other people, trying to make sure they don’t go crazy in the process. AgNES is a little bit of both: it’s a companion robot designed to accompany deep-space explorers, but it’s also one that’s probably been derelict for a while and found by some other crew hundreds of years later, without major explanation or indication as to what happened. That also makes AgNES the only mechanism through which one can reconstruct the story, and a little bit of a mystery game to try to understand what happened under its watch.

So there’s a lot going on here, and many things to unpack – most of them driven by this underlying narrative scenario, which in turn motivates the design and informs many of its decisions. As a companion robot, AgNES uses her voice to keep the crew company and give them some persistent grounding in the real world, by providing them with information or simply entertainment. AgNES can spew out facts and teach about things, but it can also tell stories or read poems. And most of this content is constantly changing, some of it even being randomly generated every time. In a way, it can be read as a science-fiction retelling of the tale of One Thousand and One Nights, where Scheherazade earned herself one more day to live every night by telling a new story. Similarly, AgNES is able to keep explorers engaged by providing one more piece of content at a time.

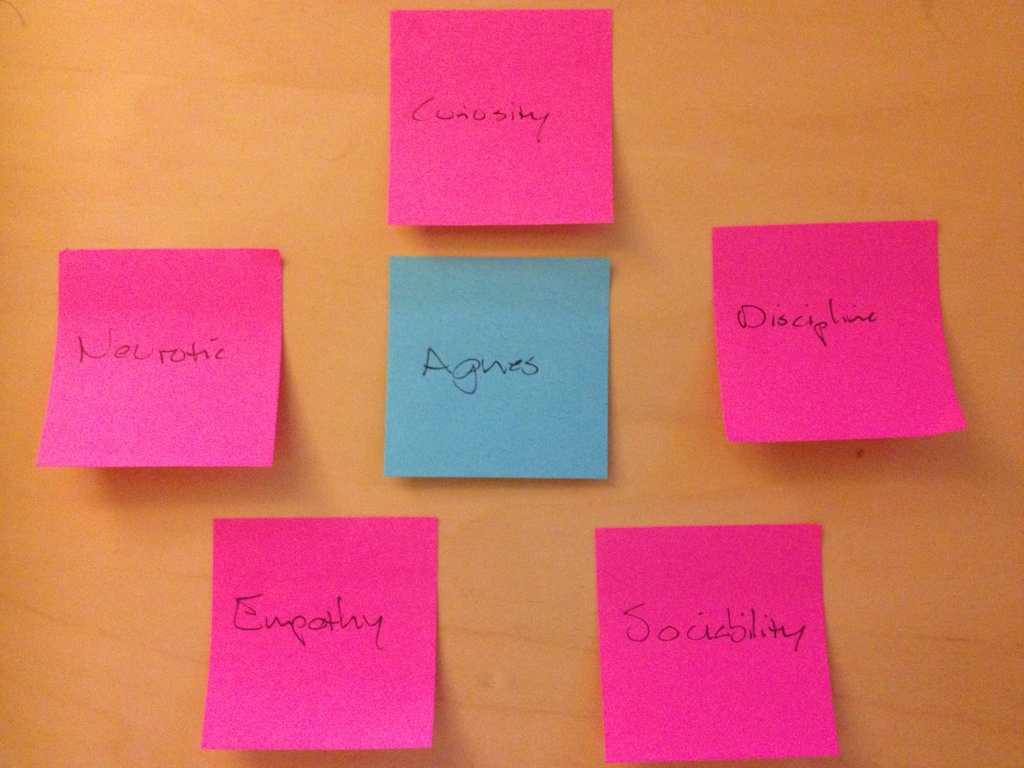

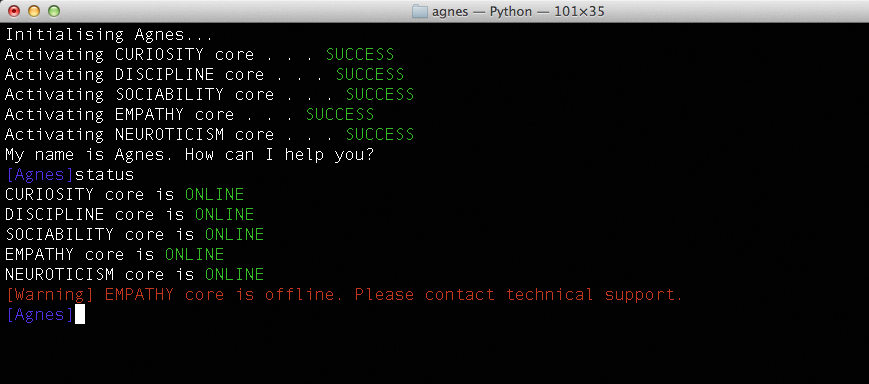

But where AgNES’s design gets really interesting is in her personality. The design of AgNES’s brain is modelled on the “Five Factor Model” (FFM) of personality, according to which personality can be described as operating across five different domains: openness, conscientiousness, extraversion, agreeableness, and neuroticism, with different personalities being variously strong or weak in each of these domains. AgNES follows the same model, albeit with a few of the trait names modified slightly. Her personality is composed of five independent “cores”, each representing one trait, and each being activated or deactivated independently. Which personality cores are active at any given time affects AgNES’s behaviour roughly following the FFM description: if the openness – renamed as “curiosity” – core is turned off, for example, AgNES’s language becomes simpler, as do the stories she will tell. If the conscientiousness – renamed “discipline” – core is offline, she might be willing to comply with some commands that would otherwise be blocked for the user. In this way, you can experience several different versions of AgNES by experimenting with personality core configurations, where each of them might yield different information about herself, her purpose, her design, her creators, or her history.
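
To make the idea of core configurations a bit more concrete, here is a minimal Python sketch of how a set of active cores might map onto behaviour. It is only an illustration, not the actual AgNES code: the `Behaviour` class, the `behaviour_for` function and the flag names are hypothetical, and only “curiosity” and “discipline” are core names taken from the description above (the other three keep their FFM names as placeholders).

```python
from dataclasses import dataclass

# "curiosity" and "discipline" are the renamed cores described in the post.
# The remaining three keep their FFM names here purely as placeholders.
ALL_CORES = frozenset(
    {"curiosity", "discipline", "extraversion", "agreeableness", "neuroticism"}
)


@dataclass(frozen=True)
class Behaviour:
    """Hypothetical behaviour flags derived from the active cores."""
    simple_language: bool   # simpler vocabulary and simpler stories
    allow_restricted: bool  # comply with commands that are normally blocked


def behaviour_for(active_cores):
    """Return the behaviour flags for a given set of active core names."""
    active = frozenset(active_cores)
    unknown = active - ALL_CORES
    if unknown:
        raise ValueError(f"unknown cores: {sorted(unknown)}")
    return Behaviour(
        # Curiosity (openness) offline: language and stories get simpler.
        simple_language="curiosity" not in active,
        # Discipline (conscientiousness) offline: blocked commands may run.
        allow_restricted="discipline" not in active,
    )
```

Calling `behaviour_for(ALL_CORES - {"curiosity"})` would then give simpler language while still keeping restricted commands blocked. Only the two traits described above are modelled; how the other three cores change things is left open here.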

That’s where the interactive storytelling component comes in. Based on the present configuration, AgNES will give you some information. Under a different configuration, you might be able to explore that information further, or you might get different, even contradictory, information. Since there’s no documentation or knowledge about the context available, there’s really no way to tell – so as the hypothetical future researcher you’re playing, you can only make your best conjecture as to what’s going on.

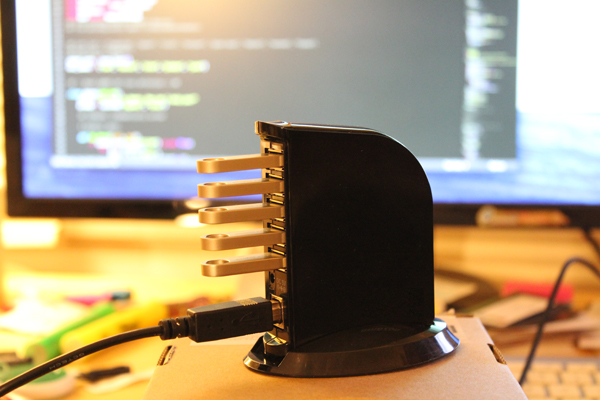

In terms of implementation, AgNES’s brain really operates as just a switch. The brain itself is a standard USB hub, and the personality cores are standard USB flash drives with renamed volume labels to tell them apart. The updated code for AgNES – as always, available on GitHub if anyone’s interested – checks which cores are plugged in after every command is executed and updates accordingly. Some commands will simply be unresponsive unless the right configuration is set, while others will exhibit different behaviour and give different information. Many of the commands are set up so that they always give the user new information in randomised fashion: for instance, the til command (or “today I learnt”) will fetch and read out the summary of a random Wikipedia article, assuming all cores are in place (if the Curiosity core is off then the command does the same, except pulling from the Simple English Wikipedia). Other commands pull information from other sources around the web, especially randomised text generators: haikus, randomly generated postmodern articles, FML tweets, and so on. The code pulls a source, parses the webpage, extracts the desired bit of text and then passes it over to the Mac’s built-in text-to-speech synthesizer to read out loud.
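
For anyone curious what that loop could look like, here is a rough Python sketch of the core check and the til command. It is not the code from the repository, and it rests on a few assumptions: that the flash drives show up under `/Volumes` on the Mac, that the volume labels are `CURIOSITY` and `DISCIPLINE` (hypothetical labels), and that the summary comes from Wikipedia’s REST endpoint for random page summaries rather than from parsing the webpage as described above. The function names are made up for illustration, and only the Curiosity core is considered when picking the source.

```python
import json
import os
import subprocess
import urllib.request

VOLUMES_DIR = "/Volumes"                    # where macOS mounts external drives
CORE_LABELS = {"CURIOSITY", "DISCIPLINE"}   # hypothetical volume labels


def active_cores(volumes_dir=VOLUMES_DIR, labels=CORE_LABELS):
    """Return the set of core labels currently mounted under volumes_dir."""
    try:
        mounted = os.listdir(volumes_dir)
    except FileNotFoundError:
        return set()
    return {name.upper() for name in mounted if name.upper() in labels}


def til_url(cores):
    """Pick the Wikipedia edition: Simple English when Curiosity is unplugged."""
    host = "en" if "CURIOSITY" in cores else "simple"
    return f"https://{host}.wikipedia.org/api/rest_v1/page/random/summary"


def fetch_summary(url):
    """Fetch a random article summary (assumes the JSON has an 'extract' field)."""
    request = urllib.request.Request(url, headers={"User-Agent": "agnes-sketch/0.1"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)["extract"]


def speak(text):
    """Read text aloud with the built-in macOS `say` command."""
    subprocess.run(["say", text], check=True)


def til():
    """Re-check the plugged-in cores, then fetch and read out a random summary."""
    speak(fetch_summary(til_url(active_cores())))
```

Re-reading the mounted volumes inside `til()` mirrors the check-on-every-command behaviour described above: unplug the Curiosity drive, run the command again, and the next summary would come from the Simple English Wikipedia instead.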

The result is often funny, and often awkward. It’s when commands break or fail to work as expected that crucial information about AgNES is revealed – when it forgets who it’s talking to and calls you by one of her developers’ names, or when it inadvertently reveals how to get access to some crucial piece of information. Working on this breadcrumb trail is probably going to be the highest priority item for the next and final iteration, so that the AgNES simulation is actually “playable.” Additionally, I need to improve how rules are managed in determining what each operation should do based on the active cores, and I need to clean up the interface further still – as well as integrating this iteration with the previous one, to actually have a moving, animated object to go with the synthesized voice you get from the computer.

I’m already getting really good feedback to consider for the final iteration – so any comments or suggestions are more than welcome! Also, I’ll try to get a video up soon to show how it actually operates, as it’s a little difficult to reproduce independently right now.