Monthly Archives: October 2013

Assembling AgNES, Or How I Read Science Fiction

Definitely the hardest part about working on AgNES so far has been coming up with a backronym for the name. But I think I’ve finally found something: A(u)gmented Narrative Experience Simulator (AgNES).

As the sole representative of the MIT Comparative Media Studies program in the SciFi2SciFab class, my approach to building prototypes and exploring science fiction themes is likely quite different from that of people at the Media Lab. For starters, I don’t have a background in engineering or computer science, and my experience “making things” is limited. This has made the class quite an interesting challenge, especially in working out what science fiction design means from my point of view.

That’s perhaps why my approach to building something like AgNES is more narrative, or is better framed around larger themes in science fiction and the exploration of possible futures. Even though I began thinking about AgNES as a version of GLaDOS from Portal, in the process of working out the code and figuring out the design I ended up thinking a lot more about the possible narrative implications of what I was doing. In Portal, GLaDOS has been left by herself in an abandoned facility (after killing most of the people there with neurotoxin), and everything we learn about her history and background comes either from direct interaction with her or from subtle clues the game’s designers hid in out-of-the-way areas of its levels. I started thinking about it from the point of view of far-future archaeology: someone might find a facility and a system like this one thousands of years from now and start interacting with it, trying to figure out what was going on at the time and what its designers were thinking.

If you think about it, most of the technology we use nowadays is fairly well documented: most of what has been widely used over the last hundred years or so can be traced roughly to where it came from and put into its historical context. Go back a few hundred years and things begin to get murkier; go back a few thousand and we have to rely on archaeology to figure things out. So even though we understand our current technologies, people looking back at this time thousands of years from now might not have such an easy time. Even going back a few years to read data stored on floppy disks can be quite a challenge nowadays. If you account for the evolution of storage media, operating systems, programming languages, interface design principles and so on, our present technology might be entirely inaccessible to someone from the future. A clear chain of influence and evolution can be mapped for now, but given enough variation, at some point that might cease to be the case.

So that all ended up flowing into the design for AgNES. First of all, how would the design stand on its own to someone using this system, without having access to any information like context, purpose, background, and so on? How could one make inferences as to any of these things strictly from what was provided through the interface? And conversely, how could one exploit this information vacuum for narrative purposes? How can I construct a story about AgNES and convey it to the user strictly through interface and interaction elements?

In other words, the point of playing around with AgNES is to find a way for it to provide information to the user bit by bit, which the user can then piece together to reconstruct the origin, purpose and story behind the system. It becomes something of a mystery game, but, just as in archaeology, it is not a game of precise inputs and outputs. The user/player can only draw conjectures from the available information; the system itself cannot speak its “truth” and fully resolve what’s going on behind the scenes. Looking at the code behind the system might be helpful, but that doesn’t mean the code contains all the elements needed to solve the mystery.

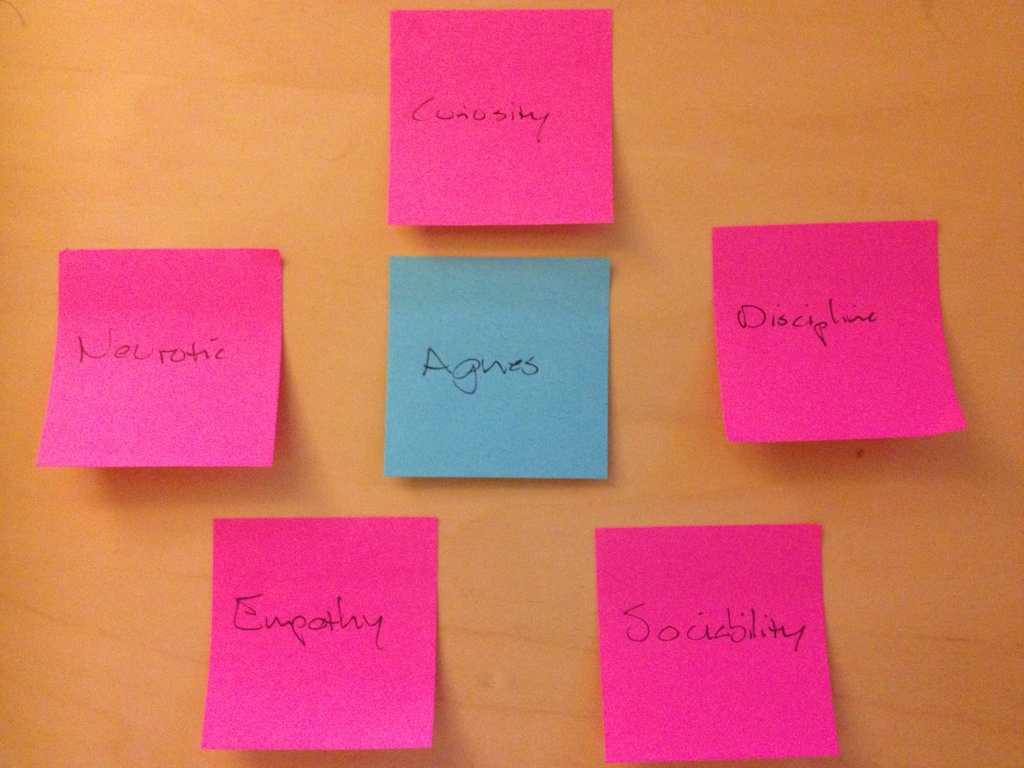

AgNES has become sort of a game, and games are essentially complex information systems that strategically conceal information from players so that the game’s mechanics drive them to uncover it. The difference is that the only objective of this game is to understand its parameters, yet the feedback mechanism is such that you can never fully know whether you have done so (a bit perverse, perhaps). You can only get closer and closer, testing things out to see what happens.

While the original design had five entirely different, interchangeable personality cores, the new design is closer to GLaDOS: it is built around five cores that each provide aspects of one whole personality. The cores can be deactivated, which changes the way AgNES operates and prompts her to reveal different pieces of information depending on the active combination of cores. The current design also draws on many things we’ve been looking at in class. Portal remains the main source, but there’s a little bit of The Diamond Age’s Primer and ractors in thinking about the purpose AgNES could serve, and she now has a new confession mode borrowed from THX 1138. The personality side of AgNES also bears some influence from the conflict between the artificial intelligences Neuromancer and Wintermute.
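To make the core mechanic a little more concrete, here is a minimal sketch, assuming each of the five cores is a simple on/off flag and each hidden fragment of AgNES’s story is gated by some combination of cores being on or off. The core names, the `Fragment` class and the fragment texts are hypothetical placeholders, not taken from the actual AgNES code on GitHub.

```python
from dataclasses import dataclass

# Hypothetical core names: placeholders, not the real AgNES cores.
CORES = ("logic", "memory", "curiosity", "compliance", "confession")


@dataclass(frozen=True)
class Fragment:
    """A piece of backstory that surfaces only under certain core states (hypothetical)."""
    text: str
    requires_on: frozenset = frozenset()   # cores that must be active
    requires_off: frozenset = frozenset()  # cores that must be deactivated


# Hypothetical fragments: the "story" is data, gated by core combinations.
FRAGMENTS = [
    Fragment("Facility log 0001: testing initiated.",
             requires_on=frozenset({"logic"})),
    Fragment("I remember the others. They left.",
             requires_on=frozenset({"memory"}),
             requires_off=frozenset({"compliance"})),
    Fragment("Confession accepted. You are forgiven.",
             requires_on=frozenset({"confession"})),
]


def revealed(active):
    """Return the texts of all fragments whose conditions match the active cores."""
    active = frozenset(active)
    unknown = active - set(CORES)
    if unknown:
        raise ValueError(f"unknown cores: {sorted(unknown)}")
    return [
        f.text
        for f in FRAGMENTS
        # every required core is on, and none of the forbidden cores is on
        if f.requires_on <= active and not (f.requires_off & active)
    ]


if __name__ == "__main__":
    print(revealed(CORES))                        # all five cores active
    print(revealed(set(CORES) - {"compliance"}))  # compliance core switched off
```

With all five cores active, the "memory" fragment stays hidden because the compliance core suppresses it; switching compliance off surfaces it. Keeping the gating rules as data rather than code means new pieces of the mystery can be added without touching the reveal logic, which suits the bit-by-bit, never-quite-complete discovery described above.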

It’s a somewhat different approach to the intersection between science fiction and speculative/critical design, but hopefully an interesting one. The code for AgNES remains available on GitHub, and hopefully in the near future it’ll be hosted somewhere it can be tested to see how people react to it. A new demo, this iteration built in collaboration with Travis Rich at the Media Lab, is also posted below.

Project LIMBO

What is LIMBO?

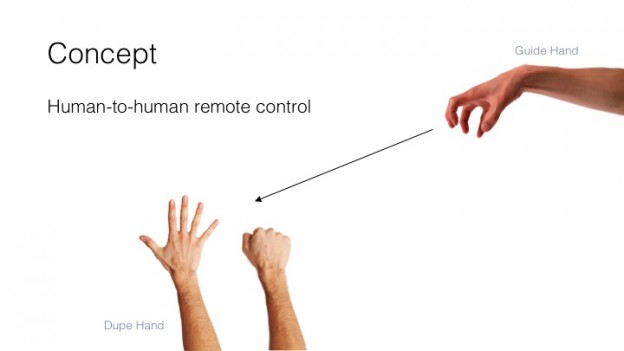

LIMBO stands for Limbs In Motion By Others, a tech demonstration of how to control other humans remotely, via digital interfaces.

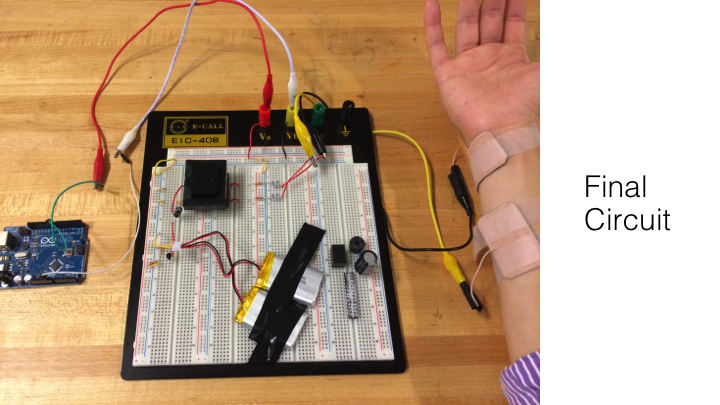

The concept is simple. First, you need two people. One is the guide, the person who wants to be in control, who sends a signal from any digital interface imaginable: a software UI button, a sensor controller or a hand gesture to a computer, for example. The other person, far away, is the dupe, who wears a special glove that can be triggered to produce muscle contractions. It’s that simple: the guide controls the movements of the faraway dupe through a digital interface.

What we’ve built is one specific implementation of this concept. Here’s how it works:

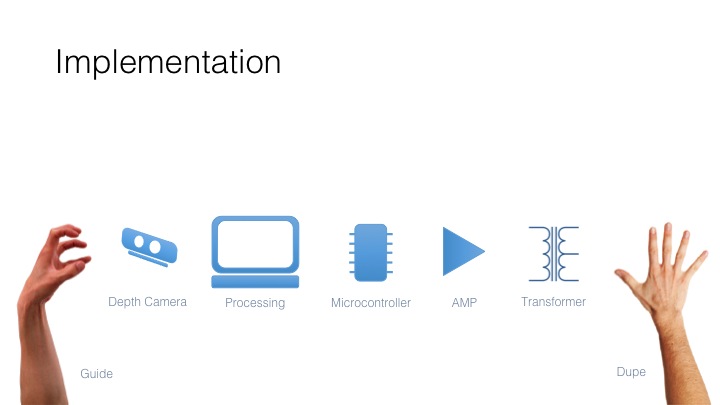

- We analyze how open the guide’s fist is, using the Creative Interactive Gesture Camera and the computer vision techniques provided by Intel’s Perceptual Computing SDK, which give us information about the position and state of the guide’s hand.

- When we detect the guide’s palm, we send the dupe information about how open the guide’s hand is.

- The dupe wears a glove with electrically conducting pads. Using the principles of functional electrical stimulation (FES), we can send a current through the dupe’s arm to activate specific muscle contractions in the dupe’s hand.

- What we’ve demonstrated is, in effect, a direct mapping of the grasp of one person’s hand onto another’s.
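The middle step of that pipeline, turning the guide’s hand openness into a signal for the dupe’s glove, can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the LIMBO code: it assumes openness arrives once per camera frame as a number in [0, 1] (or `None` when no palm is detected), that the glove accepts a stimulation level between 0 and a per-person calibrated ceiling, and that a more closed fist should produce a stronger grasp. The `GraspMapper` class and its `max_level`, `smoothing` and `deadband` parameters are hypothetical, and no Intel SDK calls are shown.

```python
from typing import Optional


class GraspMapper:
    """Hypothetical sketch: map the guide's hand openness to a stimulation level for the dupe.

    Assumptions (not from the LIMBO code):
      - openness is a float in [0, 1], where 1.0 means a fully open hand;
      - the glove accepts a level in [0, max_level], max_level being a calibrated ceiling;
      - closing the guide's fist should close the dupe's hand.
    """

    def __init__(self, max_level: float = 0.6, smoothing: float = 0.5, deadband: float = 0.1):
        if not 0.0 <= max_level <= 1.0:
            raise ValueError("max_level must be in [0, 1]")
        if not 0.0 < smoothing <= 1.0:
            raise ValueError("smoothing must be in (0, 1]")
        self.max_level = max_level
        self.smoothing = smoothing
        self.deadband = deadband
        self.level = 0.0  # current stimulation level sent to the glove

    def update(self, openness: Optional[float]) -> float:
        """Feed one frame's reading; None means no palm was detected."""
        if openness is None:
            # No palm in view: drop to zero immediately instead of holding the last value.
            self.level = 0.0
            return self.level
        openness = min(max(openness, 0.0), 1.0)  # clamp noisy readings into [0, 1]
        closure = 1.0 - openness
        # Ignore small closures so a relaxed, slightly curled hand does not trigger the glove.
        target = 0.0 if closure < self.deadband else closure * self.max_level
        # Exponential smoothing damps frame-to-frame jitter from the vision tracker.
        self.level += self.smoothing * (target - self.level)
        return self.level


if __name__ == "__main__":
    mapper = GraspMapper()
    for reading in [1.0, 0.8, 0.3, 0.0, 0.0, None]:
        print(reading, round(mapper.update(reading), 3))
```

Cutting the output to zero whenever the palm is lost, rather than smoothing toward zero, is a deliberate choice in this sketch: the dupe’s hand should relax as soon as the guide is no longer being tracked.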

Here’s a video of LIMBO in action:

Ermal Dreshaj and Sang-won Leigh

Science Fiction Books that Accurately Predicted the Future

An article on Huffington Post: http://www.huffingtonpost.com/2013/10/18/sci-fi-books-future_n_4117268.html

reconfigurable mazes

I mentioned this project in class last night:

http://www.northpitney.com/works/maze/maze.html

and another: http://www.designboom.com/art/kinetic-moving-maze-by-nova-jiang/

Are You a Cyberpunk?

I promised I’d post this. This io9 article has some history on R. U. Sirius and MONDO 2000, plus a link to the OmniReboot.

http://io9.com/are-you-a-cyberpunk-this-early-1990s-poster-explains-i-1231691511

Playfic: Interactive Fiction Platform

How Science Fiction Changed These Science Fiction Authors’ Lives.

I especially enjoyed the discussion of A Wrinkle in Time.

http://www.sfsignal.com/archives/2013/10/mind-meld-how-science-fiction-changed-our-lives/

Lucasfilm’s Ractor Stage

Rodent Sense

Inspired by the protagonist of Kill Decision, who relies on a trained pair of ravens (rather than drones), and by the SimStim in Neuromancer, which lets Case tap into the sensory experience of another person, the Rodent Sense project links its wearer to the world of animals.

We drew on Umwelt theory to imagine how a human might make sense of the world if given the opportunity to switch between various animal sensory inputs and augment (or diminish) their senses in particular ways. For the demo, we focused on letting the viewer see through the eyes of a hamster.

Since hamsters can only see 2 inches in front of their eyes, the view offered to the wearer is quite distorted. To create this experience, we attached stereoscopic cameras to a carriage and hamster-ball device pulled by the hamster, and processed the resulting video feed so that the wearer could see it in 3D through an Oculus Rift. The carriage and wheels were laser cut from 5 mm mirrored acrylic and 25 mm clear acrylic.

We also recorded fictional promotional material for our product:

Thank you for purchasing the Rodent Sense™ Virtual Reality expansion pack. Now you can have the sensory experience you’ve always wanted.

For example, with the Rodent Sense™ pack, you can focus sharply on objects that are two inches from your nose, perceive time in slow motion, and feel infrared light. With the Reptile Sense™ pack you can hear through vibrations and discern the body heat of others. And, if you’re feeling adventurous, why not see through the hundreds of eyes of the sea scallop with Aquatic Sense™.

Setting up is easy. First, catch an animal or grow one in your household hatchery. Next, simply attach the Sensory Transmitter™ to your desired animal. The Transmitter will anchor to the neural system of its host and manifest pathways necessary for wireless communication. Initiation will take a few days. Once your augmented animal system is ready, you can begin to immerse yourself in this animal’s senses using your VR unit.

Collect multiple expansion packs to switch between a fleet of your favorite animals. If you want to experience our new, multiplayer product, try Swarm Sense™. You and your friends can experience what it is like to be part of a hive of bees, a school of fish, or a flock of seagulls. Experience communication on an animal level.

Warning: Death of host sometimes occurs during Sensory Transmitter™ installation. If you experience a sensory malfunction lasting more than 4 hours, call a doctor. Sense Co. will not be held responsible.

Sense Co.

Feel Everything