Over the course of the semester I’ve been iterating on the original idea for AgNES. Originally, it was meant to be an implementation based on Portal’s snarky and evil artificial intelligence, GLaDOS, following a roughly similar design and trying to mimic the functionality. The first demo was an attempt at using the Mac’s built-in text-to-speech synthesizer to “sing” the Portal ending song, “Still Alive”. The second iteration took a more tangible approach, and with the help of Travis Rich from the Viral Systems group at the Media Lab, we were able to put together a physical manifestation for AgNES: a small robotic head capable of tracking a user’s movement when tagged by bright colours. The head – a small cardboard box holding an Arduino board and a webcam, mounted on a small servo – was controlled by an attached computer that processed the image from the camera, looked for the desired colour within a threshold in the frame, and commanded movement accordingly. The third iteration, just a couple of weeks ago, revisited the software component and started going in a different, more sci-fi-ish direction: AgNES became a sort of companion robot for long, solitary travel – essentially, deep-space exploration – which could provide a grounding, helpful voice to an imaginary traveller. By giving the traveller stories, facts, and various other voice-mediated interactions, the companionship of AgNES can keep the traveller grounded and relatively sane over long journeys. But AgNES’s personality also became something the user could interface with: based on the five-factor model for the description of personality, AgNES’s own personality is made up of five independent cores that can be individually turned on or off. How the cores are configured has an effect on the output the user gets from the various commands, and one can thus experiment with different configurations to get different results.

By playing around with AgNES’s personality, one is also playing with the conditions necessary for its optimal functioning. This means that as results vary, some cracks in its design are revealed: in the confusion, AgNES begins to unintentionally give out clues as to the identities of its designers and its operators. The user can then follow these clues to learn more about this design and better understand the purpose of AgNES and its intentions. This is grounded in yet another underlying science fiction narrative: how future individuals will react to and interact with technologies from the future past.

Future Archaeology and Deep-Space Exploration

Even just today, we’ve already accrued a significant technological past that is hard to access and explore. Floppy disks are a good example: if you stumbled upon a box of old floppies from years ago and wanted to browse for meaningful things within them, getting to that data would be really complicated. If the disks are functional and you can find a drive to read them, there’s still the matter of whether the data is uncorrupted and whether you can still get the software to read it. Not impossible, of course, but as time goes on, increasingly complicated.

Future researchers, probably deprived of access to instruction manuals and other reference materials that help us situate past technologies, will contemplate our present technological world trying to make sense of it just as we look back on archaeological remains and try to make well-informed conjectures about what objects were used for or why they were designed one way over another. While we make an effort to design technologies that are intuitive to use, this intuitiveness is anchored at specific moments in space and time. Thousands of years from now, when behavioural patterns become very different, it is plausible to assume that many of the design conventions in use today will no longer have the same effect. Future archaeologists then face a complicated task of reconstruction.

That is the narrative framing where AgNES comes in. AgNES is designed from the point of view of being this deep-space exploration companion; but as a narrative device, it is also about thinking through what would happen if, thousands of years from now, future researchers came across this device built only hundreds of years from now. What sense would they make of it? How would they understand it, after stumbling upon it floating through space in a derelict ship, perhaps still powered but no longer in the company of any travellers? In the first of the many Star Trek movies, the crew of the Enterprise stumbles upon a massive entity threatening Earth called V’Ger, which upon closer inspection turns out to be the Voyager 6 probe, found by an alien species who augmented its design to enable it to fulfil its mission to “collect knowledge and bring it back to its creator”, creating a sentient entity that makes its way back to Earth in an unrecognisable form. These technologies we’re unleashing on the universe may at some point cycle back and be found again, and it’ll be a challenge to interpret them and make sense of their original context.

AgNES and Meta-AgNES

AgNES works on two levels. As a “present day” object, it is this pseudo-artificial intelligence that provides company and grounding during deep space travel, with a customisable personality the user can modify. AgNES’s commands are limited but they provide different forms of entertainment to keep a user distracted over what would be, presumably, very long sessions of just floating through space. The design of AgNES draws from multiple science fiction sources: the already mentioned Portal was the chief one throughout, but other sources such as Arthur C. Clarke’s 2001 and its own evil AI, HAL 9000, also had a big influence, as did many of the themes we discussed in class related to artificial intelligence and robotics (including such things as Neuromancer by William Gibson, or Do Androids Dream of Electric Sheep? by Philip K. Dick). As a companion providing information, there’s also certainly some influence from the Illustrated Primer technology found in Neal Stephenson’s The Diamond Age.

As a “future day” object, the design of AgNES is populated with a series of clues that only become evident to the user when they begin playing around with the personality configuration. Deactivating certain personality cores opens up areas that would otherwise be forbidden to an “unauthorised” user, where they may find information that can later be explored in more detail using additional commands. The “future day” user can then put together these pieces of the puzzle to come up with their own conjecture as to what AgNES is, where it came from, who it was with, and how it came to be where it is. From this point of view, AgNES plays more like a game where you’re trying to decipher what’s going on with this object by interacting with it, drawing inspiration primarily from the text and point-and-click adventure games especially popular in the early 1990s. The big caveat, however, is that there’s no real resolution to the game: there’s no “win state” as such, and there’s no real correct answer – you can only get as far as the conjecture you draw from the information you received as to who was involved and what happened. Just like future researchers, you can never be fully certain whether your conjecture was actually the case.

Pay No Attention To The Man Behind The Curtain

Technically speaking, AgNES is not incredibly complex. There are two pieces running simultaneously. One is the code for AgNES itself, written in Python and managing all the commands, the UI, and the personality cores. The cores themselves are five USB sticks and a hub that are together used as a switch – the code detects which cores are plugged in at any given time and makes changes accordingly. The first versions of AgNES used Python’s cmd module for a simple command-line interface, while the final iteration uses Tkinter to instead have a simple GUI that is less prone to error and displays information more clearly. AgNES’s commands are highly dynamic, and they often pull randomised content from various sources around the web based on the information desired: for instance, under normal operations, the TIL (“Today I Learnt”) command will pull a random article from Wikipedia and then read the summary out to the user. If the Curiosity core is turned off (meaning a reduced openness to experience factor, signaled by a more limited use of language) the command does the same, but pulls from the Simple English version of Wikipedia. If, instead, the Empathy core is turned off, the system pulls a generated text from the PoMo (Post Modernism) generator and reads that out – without empathy, AgNES loses any regard for the user actually understanding the information. And so on. Not all core combinations are meaningful, but those that are pull and parse content from the web using the BeautifulSoup web scraping library.
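To make the core-switching idea a bit more concrete, here is a minimal sketch of how it could work. It is not the actual AgNES code: it assumes each USB stick shows up as a mounted volume named after its core (hypothetical labels), it only models the two cores named above, and the source URLs and the precedence when both cores are unplugged are my own assumptions. Fetching and parsing the page is left out.

```python
import os

# Hypothetical volume labels: only the Curiosity and Empathy cores are named
# in this post, so only those two are modelled here.
KNOWN_CORES = {"CURIOSITY", "EMPATHY"}

# Assumed source URLs for illustration, not taken from the AgNES repository.
SOURCES = {
    "wikipedia": "https://en.wikipedia.org/wiki/Special:Random",
    "simple_wikipedia": "https://simple.wikipedia.org/wiki/Special:Random",
    "pomo": "https://www.elsewhere.org/pomo/",
}


def detect_cores(mount_root="/Volumes"):
    """Return the set of known cores currently mounted under mount_root."""
    try:
        mounted = {name.upper() for name in os.listdir(mount_root)}
    except OSError:
        # Mount root missing or unreadable: treat every core as unplugged.
        return set()
    return KNOWN_CORES & mounted


def til_source(active_cores):
    """Pick the content source for the TIL command from the active cores."""
    if "EMPATHY" not in active_cores:
        # Assumption: a missing Empathy core wins when both cores are out.
        return SOURCES["pomo"]
    if "CURIOSITY" not in active_cores:
        return SOURCES["simple_wikipedia"]
    return SOURCES["wikipedia"]


if __name__ == "__main__":
    cores = detect_cores()
    print("Active cores:", sorted(cores))
    print("TIL would pull from:", til_source(cores))
```

Keeping the detection step separate from the decision step means the command logic can be tried out with any set of cores, without having to plug or unplug anything.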

The other piece is AgNES’s head, described above. The setup remains the same, with the box containing an Arduino board and a webcam, all of it mounted on a small servo. The image handling is done with Processing, going over a frame of the image, finding the desired colour (Post-It pink, so it can be as unambiguous as possible) and making sure it stays within a center threshold – if it falls outside of that, it signals the servo to move left or right until it readjusts.
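The tracking logic is simple enough to sketch in a few lines. The real version is a Processing sketch talking to the Arduino; what follows is only a Python rendering of the same idea, with a made-up RGB value standing in for Post-It pink and made-up tolerances. Whether “left” in the image means the servo should turn left also depends on how the webcam is mounted, so treat the direction names as an assumption too.

```python
# Assumed values: the post does not give the exact colour or tolerances.
TARGET_RGB = (255, 105, 180)  # stand-in for "Post-It pink"
COLOUR_TOLERANCE = 60         # max per-channel difference to count as a match
CENTRE_BAND = 0.2             # half-width of the dead zone, as a fraction of frame width


def matches(pixel, target=TARGET_RGB, tolerance=COLOUR_TOLERANCE):
    """True if every channel of pixel is within tolerance of the target colour."""
    return all(abs(p - t) <= tolerance for p, t in zip(pixel, target))


def servo_command(frame):
    """Decide how to move given one frame.

    frame is a list of rows, each row a list of (r, g, b) tuples.
    Returns 'left', 'right', or 'hold'.
    """
    if not frame or not frame[0]:
        return "hold"
    width = len(frame[0])
    # Collect the x position of every pixel that matches the target colour.
    xs = [x for row in frame for x, pixel in enumerate(row) if matches(pixel)]
    if not xs:
        return "hold"  # nothing to track in this frame
    mean_x = sum(xs) / len(xs)
    # Signed offset of the colour blob from the frame centre, as a fraction of width.
    offset = (mean_x - (width - 1) / 2) / width
    if offset < -CENTRE_BAND:
        return "left"
    if offset > CENTRE_BAND:
        return "right"
    return "hold"
```

The dead zone in the middle is what keeps the head from jittering: as long as the pink stays roughly centred, no command is sent at all.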

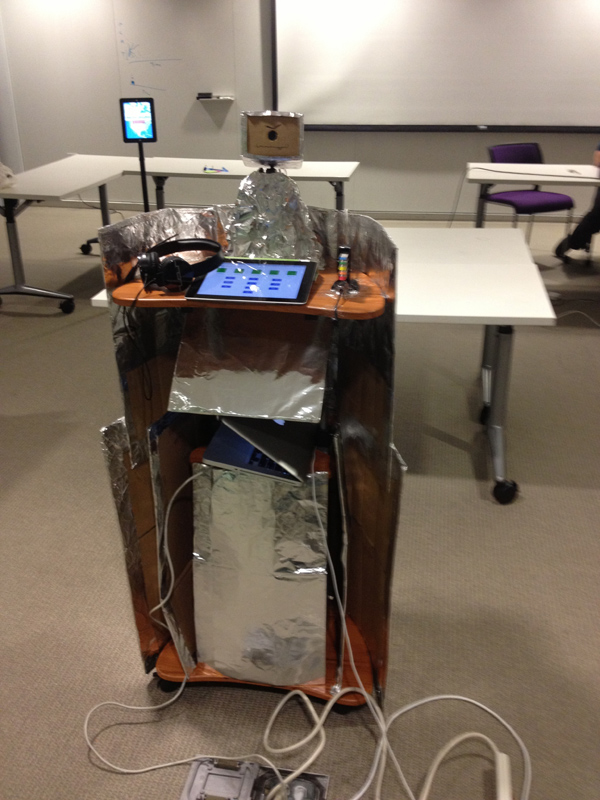

For its final presentation, both pieces were running off a Mac Mini concealed within a stand, “decorated” to appear as if it were a 1960s sci-fi B-movie prop (meaning, lots of aluminum foil). Using an app called Air Display, the Mini was using an iPad as an external display with the AgNES GUI running, making it touch-enabled very easily. (I really wanted to try to get everything running off a Raspberry Pi but it proved to be too much for this iteration. The code for AgNES itself runs OK, but the computer vision and the text-to-speech stuff would’ve been more complicated to pull off, though not impossible – it just needed more time!)

Building AgNES has been great, and it has been especially interesting to think through its implications and the underlying concepts and issues at stake. All of the code for the project is available on GitHub if you want to try it out (though replicating the specific setup might be a bit complicated). Special thanks to everyone who contributed feedback, ideas, and testing, and any comments to improve it are more than welcome!